
If you ask present-day students of information science what they imagine under the term computer "program" or "application", only a few of them will think of a single executable file from which the operating system sets up the code of a process. The meaning of the term "application" has changed with the connection of computers into a worldwide network, with the arrival of mobile devices such as PDAs and "smart" mobile phones, and with the standardization of communication protocols and application layers. Today an "application" is usually a set of components and scripts running both on the server and in client browsers, which communicate with a database engine and provide information to clients by means of an HTTP server. In this way even services such as phone book search, finding a place on a map, or searching for bus and train connections take the shape of a WWW application. The concept is becoming popular even outside the rapidly spreading internet environment. All the existing tools, knowledge and experience of programmers can also be applied to LAN (intranet) applications; for example, a company's accounting system and stock records can take the same shape. Even on a local computer, many applications use the same concept for their user interface: HTML pages. This tendency is going to strengthen with the advancement of various fields of information technology, such as wireless networks and portable computers with very low power consumption.

Industrial computing usually resists the inflow of state-of-the-art technologies and open standards. This resistance is often justified by demands for maximal reliability and robustness; on the other hand, just as often the main cause is a reluctance to learn anything new, even though the new technology makes an application significantly more beneficial and the solution cheaper. Nevertheless, the benefits of internet and intranet solutions for a great number of applications are so clear that they are beginning to enter even this conservative sector.

A WWW browser is now present on every computer, and every computer in the business area is connected to the company network. The possibility of making the visualisation, and perhaps even the control, of an industrial process accessible from any computer is a great attraction for customers.

Of course, one cannot expect the same potential from visualisation in a WWW browser as from an application program running on a local computer. We are restricted by the internet standards for document and image formats, by the possibilities of programming (scripting), by security standards, etc. Further restrictions relate, for example, to the system response time when communicating with PLCs or data acquisition units. While a locally running application must be able to control the communication in real time and, if necessary, react to delays or failures of the communication, it would be almost impossible to implement such a function (with a comparable response time) in the WWW browser environment. There is also one rule: the larger the number of clients able to access the application (for example, using different browser types on different operating systems), the more restrictions are placed on the application. An extreme case could be an application running on the miniature displays of mobile phones, where we have to make do with elementary HTML code and a single image format.

The presence of a built-in HTTP server, which makes the application accessible to clients through WWW browsers, was one of the reasons for naming the program system for rapid development of industrial visualisation and control applications Control Web. Although the HTTP server in the first version of Control Web 3 was suitable only for smaller intranet applications, growing customer demands led to significant enhancements of functionality, simpler program control, access to HTTP protocol headers, increased robustness (for example, detection and fending off of "buffer overrun" attempts to gain control of the server), etc. All these innovations led to the HTTP server of Control Web 5, which is capable not only of working as a WWW gateway to the technological process, but also of serving as a company WWW server with a complete editorial system supporting a great number of clients. Because static, "manually" created HTML pages are already a thing of the past, the HTTP server alone would not be enough; it needs other components of the Control Web system, especially SQL database access. The powerful Control Web scripting language is essential when creating such web applications.

HTML page generation

Accessing WWW pages is considered an absolute basic of computer literacy today; no computer usage course leaves out the WWW browser. However, it is less widely known what actually lies behind the WWW service, even though the principle is very simple.

Two computers must use the same communication language to be able to exchange data. The protocol used for communication between a client and a server in the WWW service is called HTTP (Hypertext Transfer Protocol). There are other protocols as well, for example SMTP for electronic mail transfer, FTP for file transfer, etc. But make no mistake: the HTTP protocol can be used for any kind of data transfer, not only hypertext. It can transfer images, executable binary files, compressed archives, etc. Because a WWW server communicates with its clients using the HTTP protocol, it is sometimes called an HTTP server.

If we pass over the technical details of establishing a network connection to the HTTP server's service port, the whole magic of WWW consists of a client application sending HTTP requests to a server, which then sends back replies. The basic request is of the type GET, i.e. get data. Which data the client is interested in is determined by a URL (Uniform Resource Locator). A URL plays a role very similar to that of a file name on a local computer, except that in the WWW environment it denotes a source of data. However, a URL can also carry additional information and parameters to the server.
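A minimal Python sketch (standard library only, not part of Control Web) of what the paragraph above describes: it splits a URL into the path that identifies the data source and the parameters carried in the query string, and builds the text of the GET request a browser would send for it. The host name and the parameter are illustrative assumptions.

```python
from urllib.parse import urlsplit, parse_qs

def describe_get(url):
    """Split a URL and build the request line and Host header a browser would send."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query        # parameters travel inside the URL
    request = f"GET {target} HTTP/1.1\r\nHost: {parts.netloc}\r\n\r\n"
    return parts.path, parse_qs(parts.query), request

path, params, request = describe_get("http://localhost/default.htm?line_count=10")
print(path)                       # /default.htm
print(params)                     # {'line_count': ['10']}
print(request.splitlines()[0])    # GET /default.htm?line_count=10 HTTP/1.1
```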

What to do with the data is the concern of the client application (the WWW browser). If the server provides an HTML file (a text file formatted according to the rules of HTML, the Hypertext Markup Language), the browser formats it according to the tags contained in the file (these tags define, for example, headings, division into paragraphs, etc.). An HTML page can also contain additional data, for example pictures. The pictures are not included directly in the text of the HTML file; the file contains only a reference to them in the form of a URL. The client then sends another request to the server with the specified URL, and the server replies with a block of data, this time containing the desired picture. The returned picture is then displayed within the page.

The similarity of a URL to a file name may suggest that the HTTP server merely translates the received URL to the name of a local file and returns this file to the client. That is truly how a static WWW application works: all HTML documents, pictures and other files that the application consists of are ready on the disk, and the HTTP server reads them and sends them to clients. The disadvantage of such an application is that it is very hard to maintain. Any change in documents interconnected by hyperlinks is difficult and time-consuming, and no larger WWW application is built this way, simply because it would be impossible to maintain. The solution is obvious: instead of a static structure of files, an application runs on the server side and creates the content of the pages algorithmically.
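The naive static model can be sketched in a few lines of Python. The sketch below is an illustration, not how Control Web resolves URLs: it maps a request URL to a file path under a hypothetical document root `/srv/www` and normalizes the path first, so that a URL containing `..` cannot reach files outside the root. Using `index.html` as the default file name is also an assumption.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import unquote, urlsplit

DOCUMENT_ROOT = PurePosixPath("/srv/www")   # hypothetical document root

def url_to_file(url, index="index.html"):
    """Translate a URL into a file path inside DOCUMENT_ROOT (the file is not read here)."""
    path = unquote(urlsplit(url).path)        # decode %2e%2e and similar escapes first
    # Anchor at "/" before normalizing so ".." can never climb above the root.
    relative = posixpath.normpath("/" + path).lstrip("/")
    return DOCUMENT_ROOT / (relative or index)

print(url_to_file("http://localhost/"))                  # /srv/www/index.html
print(url_to_file("http://localhost/../../etc/passwd"))  # /srv/www/etc/passwd
```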

As an example, consider the introductory page of a WWW application, which should contain links to all articles inserted during the last week. Manual maintenance of such a page would consist of continually watching for newly inserted articles, adding links to them to the HTML file representing the introductory page, and deleting old links. In contrast, a page created by procedure code is updated automatically. The procedure requests all pages inserted in the last 7 days and algorithmically creates an HTML document, which is sent to the client. The client part of the application cannot tell how the received HTML document was created, and it does not even need that information. If the document complies with the HTML rules, it can be displayed correctly. That is the whole magic of a dynamic WWW application.
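The dynamic version of the introductory page might look like this Python sketch. The article records, their URLs and the exact query are hypothetical stand-ins for the database the text describes; the function simply keeps articles inserted within the last 7 days and emits the HTML, newest first.

```python
from datetime import datetime, timedelta
from html import escape

# Hypothetical article records: (title, URL, time of insertion).
ARTICLES = [
    ("Article A", "/articles/a", datetime(2024, 3, 8, 9, 0)),
    ("Article B", "/articles/b", datetime(2024, 3, 3, 12, 0)),
    ("Article C", "/articles/c", datetime(2024, 2, 1, 8, 0)),
]

def generate_intro_page(articles, now, days=7):
    """Link every article inserted within the last `days` days, newest first."""
    since = now - timedelta(days=days)
    recent = sorted((a for a in articles if a[2] >= since),
                    key=lambda a: a[2], reverse=True)
    links = "".join(f'<li><a href="{escape(url)}">{escape(title)}</a></li>'
                    for title, url, _ in recent)
    return ("<html><head><title>Latest articles</title></head>"
            f"<body><ul>{links}</ul></body></html>")

page = generate_intro_page(ARTICLES, now=datetime(2024, 3, 10, 12, 0))
```

Nothing has to be edited by hand when an article is added or ages out: the next request simply produces a different document.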

As an illustration, here is an example of dynamic creation of the root page in the Control Web 5 system:

```
httpd WebServer;
  pages
    item
      path = '/';
      call = GenerateIndex();
    end_item;
  end_pages;

  procedure GenerateIndex();
  begin
    PutText('<html><head><title>Demonstration</title></head>');
    PutText('<body>A dynamically generated page</body></html>');
  end_procedure;
end_httpd;
```

When the WWW browser sends a request with the URL ‘/’ (the root, or so-called index page), the server knows that this file is not to be looked for on the disk; instead, it should call the procedure GenerateIndex to create it. The code of this procedure creates the text of the page by calling the PutText procedure repeatedly. The WWW browser receives this HTML document:

```
<html><head><title>Demonstration</title></head>
<body>A dynamically generated page</body></html>
```

As mentioned before, the browser cannot find out whether this document was saved as a file on a disk or created algorithmically.
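For comparison, the same URL-to-procedure mapping can be sketched with Python's standard `http.server` module. This is an analogy rather than a translation of Control Web internals: the `PAGES` dictionary plays the role of the `pages` section and `generate_index` the role of GenerateIndex; the port in the closing comment is an arbitrary assumption.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

def generate_index():
    """Counterpart of GenerateIndex: builds the page text in memory, no disk access."""
    return ("<html><head><title>Demonstration</title></head>"
            "<body>A dynamically generated page</body></html>")

# Counterpart of the `pages` section: URL path -> generating procedure.
PAGES = {"/": generate_index}

class WebServerHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        generator = PAGES.get(self.path.split("?", 1)[0])
        if generator is None:
            self.send_error(404)   # a real server would fall back to files on disk here
            return
        body = generator().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

# To try it: HTTPServer(("localhost", 8080), WebServerHandler).serve_forever()
```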

Dynamic generation brings no advantage in the case of such a simple page; the only benefit may be that the whole WWW application can work without any disk access. But let's consider the following GenerateIndex procedure:

```
procedure GenerateIndex();
var
  i : integer;
begin
  access_count = access_count + 1;
  PutText('<html><head><title>A dynamic page</title></head><body>');
  if display_table then
    PutText('<table border="1" width="30%" align="center">');
    for i = 1 to lines do
      PutText('<tr><td> line </td><td> ' + str(i, 10) + ' </td></tr>');
    end; (* for *)
    PutText('</table>');
  else
    PutText('<center><ul>');
    for i = 1 to lines do
      PutText('<li> line ' + str(i, 10) + '</li>');
    end; (* for *)
    PutText('</ul></center>');
  end; (* if *)
  PutText('<hr><b>Page generated on ' + date.TodayToString() +
          ' at ' + date.NowToString() + '.<br>Access count: ' +
          str(access_count, 10) + '</b>');
  PutText('</body></html>');
end_procedure;
```

In this case the advantage of dynamic generation is obvious. In the first place, a single procedure creates HTML text corresponding either to a table or to a list, depending on a condition. Furthermore, it is important to highlight that the length of the page is not given beforehand but depends on the value of the variable lines. At the end of the page there is an access counter and the current date and time. Every client always receives a different HTML document from the server (the documents differ at least in the access counter value and in the current date and time), even when asking for exactly the same page.
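A rough Python counterpart of the second GenerateIndex procedure, assuming the values of `lines` and `display_table` are passed in as arguments and the access counter is a module-level variable. The date and time formats are our own choice, since the text does not say what TodayToString and NowToString return.

```python
from datetime import datetime

access_count = 0   # counts every generated page, like access_count in the example

def generate_index(lines, display_table, now=None):
    """Return the page as a table or a list of `lines` rows, followed by a footer."""
    global access_count
    access_count += 1
    if now is None:
        now = datetime.now()
    parts = ["<html><head><title>A dynamic page</title></head><body>"]
    if display_table:
        parts.append('<table border="1" width="30%" align="center">')
        parts += [f"<tr><td> line </td><td> {i} </td></tr>" for i in range(1, lines + 1)]
        parts.append("</table>")
    else:
        parts.append("<center><ul>")
        parts += [f"<li> line {i}</li>" for i in range(1, lines + 1)]
        parts.append("</ul></center>")
    parts.append(f"<hr><b>Page generated on {now:%Y-%m-%d} at {now:%H:%M:%S}."
                 f"<br>Access count: {access_count}</b>")
    parts.append("</body></html>")
    return "".join(parts)
```

As in the original, the page length follows `lines`, and two identical requests still yield different documents because of the counter and timestamp.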

An application or just viewing documents?

The origin of the WWW service lies in a system that made documents accessible to scientists at the European Organization for Nuclear Research (CERN). The possibility of inserting a link to another document into the text (this is where the name hypertext comes from) transforms ordinary text documents into a simple application that responds to user requests: clicking a link loads a new document into the browser. If there were no possibilities other than links to other files, it would not be appropriate to talk about an application environment. However, the popularity of WWW caused a rapid evolution of this standard, and step by step many new possibilities were added, such as pictures inserted into the text, more precise formatting, and the ability to create forms that users can fill in to enter data for the application. The development of the HTML standard did not stop there, and it now also includes the option to write scripts (scripts in an HTML page are program code executed in the WWW browser), cascading style sheets, dynamic HTML, etc. So today we can say that HTML is a relatively rich and powerful application environment.

The definition of the HTTP protocol contains not only a request for reading data from a server (the GET method), but also a request for writing data to the server (the PUT method). However, this method is not used in practice, because it is not supported by client applications and, above all, it brings many security risks: in principle it is unthinkable that clients could save files on a server under a given URL. That is why sending data from a client to a server is restricted to two HTTP protocol methods:

- GET, where the names and values of the form controls are appended to the URL,
- POST, where the form data are sent in the body of the request, following its header.

Note that the HTTP protocol does not define any mechanism for processing the data. It depends solely on the server application how it handles them.
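The following Python sketch shows how the same two form fields travel to the server with GET (appended to the URL) and with POST (in the request body, using the standard `application/x-www-form-urlencoded` encoding). The field names are taken from the example later in this article; the host and file name are assumptions.

```python
from urllib.parse import urlencode, parse_qs

form = {"display_format": "true", "line_count": "10"}
encoded = urlencode(form)          # 'display_format=true&line_count=10'

# GET: the encoded fields become the query part of the URL.
get_request = (f"GET /default.htm?{encoded} HTTP/1.1\r\n"
               "Host: localhost\r\n\r\n")

# POST: the URL stays clean and the same string is sent as the request body.
post_request = ("POST /default.htm HTTP/1.1\r\n"
                "Host: localhost\r\n"
                "Content-Type: application/x-www-form-urlencoded\r\n"
                f"Content-Length: {len(encoded)}\r\n\r\n"
                f"{encoded}")

# The server decodes the body exactly as it would decode a query string.
received = parse_qs(post_request.split("\r\n\r\n", 1)[1])
```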

Even though the POST method was designed for sending data from HTML forms, it was extended with the possibility of sending whole files. In contrast to the PUT method, the URL does not define where to save the file, but rather which part of the server application should process it. Again, how the file is treated is up to the implementation of the server application; it could, for example, be saved in a database.
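Browsers commonly implement file upload through POST with the `multipart/form-data` encoding (standardized in RFC 7578). A hedged Python sketch of how such a request body is assembled follows; the boundary string and the field names are arbitrary assumptions, and a real client must choose a boundary that does not occur anywhere in the data.

```python
def build_multipart(fields, files, boundary="sketch-boundary-7d41"):
    """Return (Content-Type value, body bytes) for a multipart/form-data POST.

    fields: {name: text value}; files: {name: (filename, content type, bytes)}.
    """
    delimiter = b"--" + boundary.encode("ascii")
    chunks = []
    for name, value in fields.items():
        chunks += [delimiter,
                   f'Content-Disposition: form-data; name="{name}"'.encode(),
                   b"", value.encode("utf-8")]
    for name, (filename, content_type, data) in files.items():
        chunks += [delimiter,
                   f'Content-Disposition: form-data; name="{name}"; '
                   f'filename="{filename}"'.encode(),
                   f"Content-Type: {content_type}".encode(),
                   b"", data]                  # empty line separates part headers from data
    chunks += [delimiter + b"--", b""]         # closing delimiter ends the body
    return f"multipart/form-data; boundary={boundary}", b"\r\n".join(chunks)

ctype, body = build_multipart({"category": "news"},
                              {"article": ("article.xml", "text/xml", b"<article/>")})
```

The URL of such a POST names the part of the server application that should receive the file, while the file name travels inside the body.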

The Control Web system offers a number of ways to process data received from a client:

- The easiest way is to define the relationship between the names of control elements on the HTML page and data elements in the application. When the user sends data from the form to the application, the corresponding data elements receive the values filled in on the form.
- If a part or the whole of an HTML page is generated by procedure code, the programmer can use the GetURLData procedure to get a string containing the names and values of the control elements from the form. It is up to the programmer to extract the particular values from this string.
- GetURLData can be called only from a procedure that processes a GET request; data sent by the POST method can be caught by the OnFormData procedure. This procedure is called whenever the server receives data from a form, regardless of whether they were sent by the GET or the POST method.
- If an application uses the extension of the POST method that allows sending whole files to the server, it can make use of the OnPostFile event procedure.

Here we can finally explain where the values of the variables display_table and lines in the previous example came from. The HTML form contains control elements named display_format and line_count, and when the form is sent to the server, the names and values of these control elements are inserted into the URL:

http://localhost/default.htm?display_format=true&line_count=10

In the HTTP server, it is enough to define the mapping between the names of the control elements and the names of variables in the application:

```
httpd WebServer;
  static
    lines : integer;
    display_table : boolean;
    access_count : longint;
  end_static;

  forms
    item
      id = 'line_count';
      output = lines;
    end_item;
    item
      id = 'display_format';
      output = display_table;
    end_item;
  end_forms;
  ...
end_httpd;
```
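The declarative mapping in the `forms` section can be imitated in Python with a table from control-element ids to variable names and converters. This sketch is only an analogy (the names `FORMS` and `apply_form_data` are hypothetical); it decodes the query string with `urllib.parse.parse_qs` and ignores controls that have no mapping.

```python
from urllib.parse import parse_qs

# Hypothetical analogue of the `forms` section: control id -> (variable, converter).
FORMS = {
    "line_count": ("lines", int),
    "display_format": ("display_table", lambda s: s.lower() == "true"),
}

def apply_form_data(query, variables):
    """Copy the values of known form controls from a query string into `variables`."""
    for control_id, values in parse_qs(query).items():
        if control_id in FORMS:
            name, convert = FORMS[control_id]
            variables[name] = convert(values[-1])   # last value wins if a control repeats
    return variables

state = apply_form_data("display_format=true&line_count=10",
                        {"lines": 5, "display_table": False})
```

A production version would also have to deal with values that fail conversion (for example `line_count=abc`), which this sketch lets raise a `ValueError`.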

Problems may be caused by an ambiguity in the HTTP standard's definition of the server's behaviour when replying to a POST request. The URL is part of the POST request; it is defined by the ACTION attribute in the form definition in the HTML document. According to the definition, however, this URL is intended to identify the entity that is related to the data being sent within the POST. It is not specified whether the data referenced by the URL should be returned (as after GET) or not. Experience has shown that the optimal server behaviour after a POST, the one that makes the development of WWW applications as easy as possible, is a behaviour that mimics the GET method. If the data referenced by the URL exist, they are returned with the code 200 OK. If they do not exist, 404 Not Found is not returned (as it would be after GET); instead, 204 No Content is returned.
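The reply rule described above fits in a small Python function. `resources` is a hypothetical dictionary standing in for whatever the server can return for a URL; only the status codes come from the text.

```python
def reply(method, url_path, resources):
    """Return (status code, reason, body) for a GET or POST to `url_path`."""
    if url_path in resources:
        return 200, "OK", resources[url_path]      # same as GET when the data exist
    if method == "POST":
        return 204, "No Content", b""              # data accepted, browser keeps its page
    return 404, "Not Found", b""
```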

Optimization of the data flow over a TCP/IP network

Memory buffers (caches), in which data are temporarily stored at the place where they are needed instead of always being transferred from their storage place, have proven to be a powerful way of making many technical and software systems much faster and more efficient. For example, a processor cache allows the processor to work many times faster than the memory speed alone would permit, a disk cache increases the effective transfer rate, etc.

In the same fashion, HTTP caches can significantly speed up WWW page access. WWW pages contain many static images that do not change for a long time, and it would be wasteful for the browser to download them from the server every time. That is why every browser stores a certain amount of documents and pictures on a local disk, from which they can be loaded much faster than over any IP network.

There are also specialized servers that work as HTTP caches on the internet or an intranet. If several clients (for example, in a company computer network) access a remote server not directly but through a proxy server, these clients can access WWW pages significantly faster. The first client causes the pages to be loaded into the proxy server; for the other clients, the proxy server just makes sure that the data on the original server have not changed, and if they have not, it returns the data from its cache instead of lengthily downloading them from the internet. Both mechanisms (the WWW browser cache and specialized proxy servers) are very similar and use very similar algorithms.

A key question in every system with a cache is ensuring data consistency. If, for example, an image on a server changes, it would be wrong to display the old image stored in the local cache. However, the client has no way to find out whether its cache is up to date other than making a request to the server. With every data block (a data block is, for example, an HTML document, a picture, etc.) transferred through the HTTP protocol, information about the time of its last modification is transferred as well. Since the client knows how old the document stored in its cache is, it only has to find out how old the current document on the server is.

For this purpose, the HTTP protocol provides another method, called HEAD. This method corresponds to the GET method (it contains the URL and other request data), but the client expects only the header of the data block as a reply. This header also contains the time of the last modification, so the client can decide whether to get the data using the GET method or to use the data from its cache.
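A client-side sketch in Python of the HEAD-based check: the cached copy is reused only if the Last-Modified value reported by the server is not newer than the one stored with the cache entry. The plain header dictionary is a simplification (real HTTP header names are case-insensitive), and the function name is hypothetical.

```python
from email.utils import parsedate_to_datetime

def cache_is_fresh(cached_last_modified, head_headers):
    """Decide from the headers of a HEAD reply whether the cached copy can be reused."""
    server_value = head_headers.get("Last-Modified")
    if cached_last_modified is None or server_value is None:
        return False                       # nothing to compare: fetch the data with GET
    # HTTP dates such as "Mon, 04 Mar 2024 10:00:00 GMT" parse to aware UTC datetimes.
    return parsedate_to_datetime(server_value) <= parsedate_to_datetime(cached_last_modified)
```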

Using the HEAD request may save useless data transfers, but if the cached document is out of date, it prolongs the transfer by one extra HEAD request and reply. That is why there is one more alternative in the internet environment. The client makes a request using the GET method, but inserts a header (If-Modified-Since) into the request, informing the server that the data are to be sent only if they have been modified since the last modification date of the copy in the cache. The server itself decides whether the client's data are up to date; if newer data are available, it replies with them directly. If not, it informs the client that the data have not been modified (304 Not Modified). This approach ensures minimal network traffic and optimal data transfer. The HTTP server of the Control Web system supports both ways of optimizing the data transfer.
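From the client's point of view, the conditional GET can be sketched as follows. The cache structure and the function names are hypothetical; the sketch only shows the If-Modified-Since / 304 Not Modified round trip, not how any particular browser or proxy server stores its data.

```python
def request_headers(cache, url):
    """Headers for a GET: ask for the data only if they changed since the cached copy."""
    entry = cache.get(url)
    return {"If-Modified-Since": entry["last_modified"]} if entry else {}

def handle_response(cache, url, status, headers, body):
    """Return the data to display and keep the cache up to date."""
    if status == 304:                       # Not Modified: the cached copy is still valid
        return cache[url]["body"]
    if status == 200 and "Last-Modified" in headers:
        cache[url] = {"last_modified": headers["Last-Modified"], "body": body}
    return body
```

One request suffices in both cases: either the new data arrive at once, or a short 304 reply confirms the cached copy.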

The above-mentioned mechanisms are easy to imagine with static documents saved as files. The moment of the last modification of every file is stored in the file system, and the HTTP server can use it. But if the document is generated dynamically, the situation gets more complicated:

- A dynamically generated document has its last modification moment set to the current time. The client never has an up-to-date version and always has to transfer the data from the server.
- However, a dynamically generated page does not always differ from the page generated by the previous request. If the algorithm only decodes the URL and returns data stored in a database, it is pointless to set the moment of last modification to the current date and time. The application can set the moment of the last modification by calling the SetLastModified procedure, and the Control Web HTTP server then automatically either returns the data to the client or reports that the data were not modified. Although the bottleneck of WWW servers is usually not the computing power but the bandwidth of the transfer line, the procedure that generates a page can also find out the value of the If-Modified-Since header by calling GetHeader and then decide whether it is necessary to generate the page at all. If, by calling SetLastModified, it sets the date equal to (or earlier than) the date in the If-Modified-Since header, the server answers with the code 304 Not Modified, and no time has to be wasted on generating the page.
- When the algorithm that generates the page only redirects the data flow to a file by calling RedirectToFile, the moment of the last modification is set not to the current time, but to the moment of the last modification of the file.
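On the server side, the same idea lets a page generator skip its work. The Python sketch below is an analogy to the SetLastModified/GetHeader approach, not Control Web code: `last_modified` stands for the timestamp of the underlying database record (an assumption), and `generate` is called only when the client's copy is out of date.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

def serve_dynamic(request_headers, last_modified, generate):
    """Answer a GET for a generated page whose data changed at `last_modified` (aware UTC)."""
    last_modified = last_modified.replace(microsecond=0)   # HTTP dates have 1 s resolution
    since = request_headers.get("If-Modified-Since")
    if since is not None:
        try:
            if last_modified <= parsedate_to_datetime(since):
                return 304, {}, b""        # Not Modified: the page is not generated at all
        except (TypeError, ValueError):
            pass                           # malformed or zone-less header: treat as absent
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    return 200, headers, generate()
```

Usage: `serve_dynamic({}, datetime(2024, 3, 4, 10, 0, tzinfo=timezone.utc), build_page)` returns status 200 and a `Last-Modified` header, where `build_page` is any callable returning the page bytes; repeating the request with that header value returns 304 without calling `build_page`.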

Control Web is also able to generate documents dynamically without any involvement of the user program. If, for example, we want to send the current appearance of some virtual instrument, we can do that by simply mapping an image URL to a visible virtual instrument in the application:

```
httpd WebServer;
  instruments
    item
      path = '/img1';
      instrument = panel_energy;
    end_item;
    item
      path = '/img2';
      instrument = panel_water;
    end_item;
    ...
  end_instruments;
  ...
end_httpd;
```

Whenever an HTML page contains a link to the image "img1", the server does not search for it on the disk (a file with such a name does not exist anyway); instead, it draws the up-to-date appearance of the instrument called panel_energy. In this case the moment of the last modification is always set to the current time.

Control Web 5 as an HTTP server http://www.mii.cz

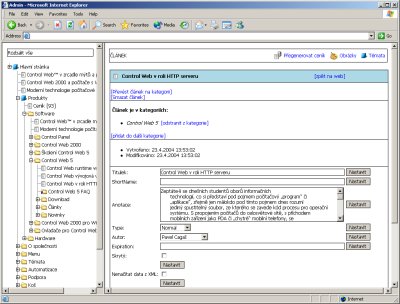

The great power of the HTTP server built into the Control Web 5 system is demonstrated by an application developed to run on the server http://www.mii.cz. This application contains not only the "client" part, which displays the data to visitors of the server (if you are reading this article on the server www.mii.cz, the data were prepared for you by Control Web), but also the administration part, which allows comfortable administration of the whole server.

The server application meets all the requirements of a modern WWW information server:

All texts are stored in the XML format that use a common

document type definition. Thus a unified and consistend

formatting of all pages is ensured. By changing the XML

transformation the appearance of all texts is also changed. That

way the server separates the content of the data from their

formatting. No data are stored statically. All the texts are

generated to the HTML format from the source XML files

dynamically during the application run. All data are stored in a SQL database. The content of the

data can be easily backed-up or replicated. The appearance of a page is defined algorithmically based

on a data structure. If there is for example a new product added

to the company supply, it will be enough to add its description

to the application and insert it in a corresponding category.

The annotation with links to a detailed description is

automatically inserted in the product page, eventually even in

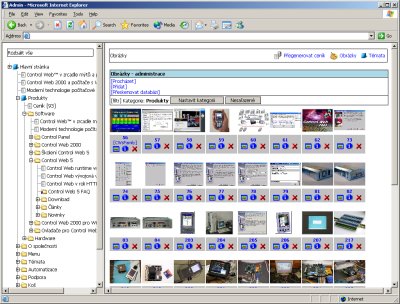

the news page, etc. The support of inserting images in the documents is

completely automated. The image index is stored in a database

and so a potential exchange of the images is very simple.

According to the image attributes there are automatically

generated large or small thumbnails, links to the

full-resolution image, etc. during the XML to HTML

transformation.
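To illustrate separating content from formatting, here is a small Python transformation from article XML to HTML using `xml.etree.ElementTree`. The element names (`article`, `title`, `para`, `image`), the `size` attribute and the `/images/` and `/thumbs/` URL layout are all hypothetical, since the article does not publish its document type definition; a production system might well use XSLT instead.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical article markup; the real document type definition is not given in the text.
SOURCE = """<article>
  <title>Example</title>
  <para>Text of the article.</para>
  <image src="pump.png" size="small"/>
</article>"""

def article_to_html(xml_text):
    """Render one article; changing this function changes the look of every article."""
    root = ET.fromstring(xml_text)
    out = [f"<h1>{escape(root.findtext('title', ''))}</h1>"]
    for element in root:
        if element.tag == "para":
            out.append(f"<p>{escape(element.text or '')}</p>")
        elif element.tag == "image":
            src = escape(element.get("src", ""))
            size = escape(element.get("size", "small"))
            # A thumbnail chosen by the size attribute, linked to the full-resolution image.
            out.append(f'<a href="/images/{src}"><img src="/thumbs/{size}/{src}" alt=""></a>')
    return "\n".join(out)

html_out = article_to_html(SOURCE)
```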

The content creators have an administrative interface at their disposal. It allows them to change the structure of categories and to add or modify articles, descriptions, images, etc. The whole interface works in a standard WWW browser. For security reasons, access to this interface can be restricted not only by a name and password, but also to specific IP addresses or IP network masks.
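Restricting access by IP address before checking the password can be sketched with Python's `ipaddress` module. The allowed networks and the `check_password` callback are hypothetical placeholders, not Control Web settings.

```python
import ipaddress

# Hypothetical allow-list for the administrative interface.
ALLOWED_NETWORKS = [ipaddress.ip_network("192.168.1.0/24"),
                    ipaddress.ip_network("10.0.0.5/32")]

def admin_access_allowed(client_ip, user, password, check_password):
    """Allow access only from permitted networks and with valid credentials."""
    address = ipaddress.ip_address(client_ip)
    if not any(address in network for network in ALLOWED_NETWORKS):
        return False                  # wrong network: refuse before checking credentials
    return check_password(user, password)
```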

Administrative interface for WWW page content creators

Basic operations on categories (rearranging subcategories, editing annotations, etc.) are implemented using HTML forms. However, the editing capabilities of HTML form controls are so limited that comfortable editing of longer articles with more sophisticated formatting is almost impossible. That is why the system offers the option to download the source form of an article in XML format to a local computer, where it can be edited with any available XML editor. The XML can then be uploaded back to the server. The server application checks the validity of links and the accessibility of images, if necessary asks the user for the location of missing images on the local computer, automatically transfers them to the server and adds them to the database.

WWW server picture album

The application at http://www.mii.cz/ shows only a small part of the Control Web system's capabilities aimed at developing distributed applications for clients using WWW browsers in internet and intranet environments. Many other areas, such as the development of distributed applications based on "fat clients", shared and synchronized data sections, automated generation of DHTML applications, advanced 3D process visualisation, etc., go far beyond the scope of this article.
